Years ago, I was leading tech recruiting at a big company, and I was deep into a conversation about cheating. We were concerned that a candidate we had interviewed for a software engineering role had cheated on an assessment — specifically, the phone screen and take-home coding test. The big questions were whether the candidate had searched the internet to find some correct answers and leveraged someone else’s code for some of the asynchronous work.

Granted, if a candidate cheated by leveraging someone else’s work, it’d be pretty easy to figure that out in an old-school in-person interview or a “walk me through your work” follow-up screen-share interview. Or, for in-person, you could test them using a whiteboard or giving them a laptop and asking them to do the work in front of you. But this conversation was not just about one candidate. It was part of a larger conversation on our “do it at home” assessment and what cheating meant in a world where so much information was at a candidate’s fingertips.

There were two points of view in the conversation. One was, “If he cheated, we want nothing to do with him. That’s a strong no-hire, for sure!” The other was, “Is it really cheating if he used the resources he’d have available to him in the job anyway? It’s not like we don’t let engineers use the internet, and if he can leverage a code repository to find the correct answer quickly, isn’t that a skill we’d want?”

As we leveled up the conversation to our overall process and principles around cheating, the questions became about integrity and disclosure (did the candidate volunteer that they found some answers and code online, or did they claim it was all their own work?) and whether we had set appropriate boundaries before the screening around what resources they could and couldn’t use. (If we didn’t say they couldn’t do X, then can we blame them for doing X?)

As a parent with two teenagers, married to someone who worked as a test proctor in a high school, I was also worried about what cheating looked like in school. I mean, did anyone not use Wikipedia for book reports in the 2010s? And now, with all the AI apps from the big tech companies, would anyone in school with access not use those as well?

Enter AI

Most of the major tech players have come out with incredible AI tools that operate as copilots. They can generate content quickly on all kinds of topics. They can write pretty good code. And they can even coach you on how to interview in real time, during the video interview, in a way that may not be evident to the interviewer or recruiter.

As talent leaders, I think we need to lead a conversation around cheating.

If you’re a candidate, is it cheating to have an AI copilot:

- write your resume and tailor it to the specific job requirements for the req you’re applying to?

- research the company and generate the questions you’ll ask the hiring manager and recruiter?

- prep you for the interview by generating answers you can memorize or read during the video interview?

- prompt you with tailored responses to behavioral and technical interview questions?

- generate solutions to take-home assessments (or even complete part of the assessment for you)?

- write the thank-you email to the hiring manager, tailoring your note so that it highlights your strengths relative to the job needs and any concerns shared about your background during the interview?

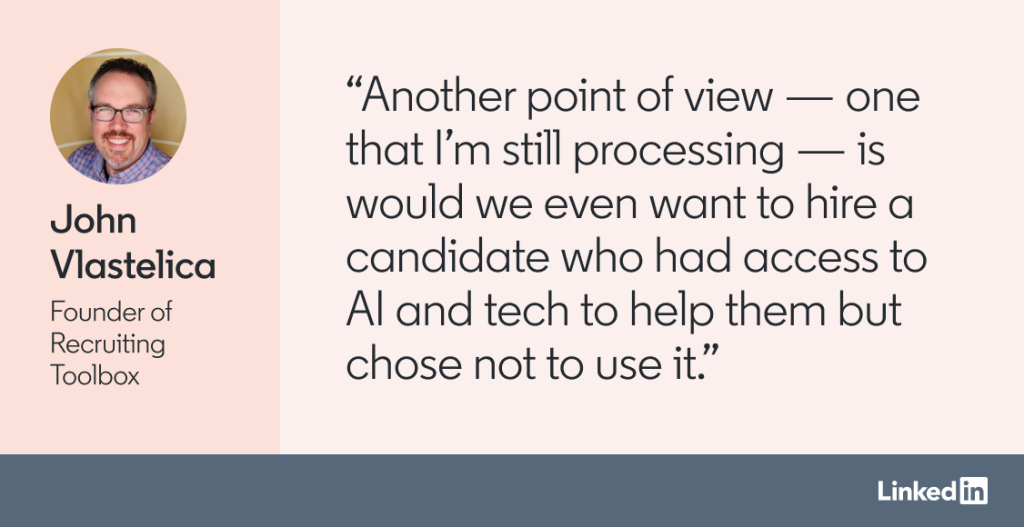

Another point of view, one that I’m still processing, is whether we would even want to hire a candidate who had access to AI/tech to help them but chose not to use it. In other words, not using AI to prep for and complete your assessment may actually be a negative, in the same way that not using spell-check or a calculator (and misspelling words or wasting time doing manual long division) would be viewed as a negative by many interviewers and hiring managers today.

This is not a straightforward issue

I’m not sure there’s an obvious answer to what’s cheating and what’s not. I think it’ll depend a bit on your view as the recruiter or hiring manager, the job/role and the risks of hiring someone who can’t do what they demonstrated they could do in the screening process, what kind of assistance the candidate received, and whether they disclosed that they leveraged AI to help them.

My personal view is that if someone was asked to do something independently, and expectations were set that they were not to leverage outside knowledge or get assistance from AI or a colleague, and then we find out they didn’t do their own work and didn’t disclose that it wasn’t their own work, that’s a strong no-hire from me.

But the bigger issue is: what’s cheating in 2024 and beyond? We’ve all used spell-check, calculators, Excel formulas, PowerPoint templates, and maybe even data visualization tools to make our charts look better. And, for developers, it’d be unusual and super-inefficient not to use code from code repositories. Those things have all been pretty normalized as not cheating for years now.

We need a company-wide point of view

I’m worried, though, that companies don’t have a point of view on this. I’m worried that we’re letting each recruiter or hiring manager or interviewer decide what cheating is — and isn’t. And that candidate A may get hired but candidate B doesn’t get hired — both equally qualified, both using AI to assist them — because of a decentralized “it’s up to each hiring team” approach.

We need a company-wide point of view.

I’m especially concerned about false negatives — missing out on someone who would have been good, but was disqualified because we didn’t communicate what’s OK and not OK to use when prepping for and completing the interview process.

And I’m also concerned about hiring unqualified people who cheat. Interview lies and fraud existed long before the internet, but it’s now easier than ever for a candidate to cheat and appear more qualified than they really are, which raises the risk of hiring someone who is both low integrity and unqualified to do the work. The recipe of faked resumes/profiles + automated company screening + pre-interview, asynchronous assessments + remote interviews + remote work, all with AI assistance, should have us all a bit nervous about identity verification and skills verification.

Now, I’m not negative on tech. It’s a great time to be alive. And I love the AI I’ve seen so far. I do think the role of the recruiter has been evolving — and will evolve quickly in 2024 and 2025 — as AI is embedded into all of the tools we use.

And I’m not worried that this issue has already exploded. It hasn’t. Yet.

The business needs our leadership on this

We have an incredible opportunity to play a bigger leadership role around AI and assessment and fairness.

Tech is — or soon will be — forcing companies to articulate a point of view on what cheating is and isn’t. (Side note: This same discussion is happening in elementary and secondary schools as well as universities. I had a conversation a few months ago with a department chair in the school of business in a regional university, and they’re trying to decide if they’ll need to move to live, old-school, pen-and-paper tests or oral, no-tech-allowed tests like it’s the 1960s!)

You may say, but John, I’ve already got an opinion on this. I think X. Great. The question is, though, whether your opinion X is shared across your talent team, across the hiring managers, across the interviewers, across HR, and across your executives.

We do a lot of work helping companies define their hiring bar (we’ve done this work for all kinds of companies, from LinkedIn to Uber to EA Games to Starbucks). Starting now, we’ll help companies articulate a company-wide point of view on what is and isn’t cheating.

This is a call to action — not a call to panic. Have the conversation with your boss and with key execs. Get a sense of your principles around candidate assessments and remember to consider access and fairness (not all candidates have access to AI tools, just like not all candidates have access to career coaches and university test prep courses). And then propose some principles, socialize them, get buy-in, and embed them into your hiring manager training and recruiter training.

...

Source > LinkedIn